So, IVO Ltd is getting ready to launch the IVO Quantum Drive, based on the theory of Quantized Inertia, into space as a fuel-less, pure-electric method of satellite propulsion. The company claims that this "is not a reactionless system" and that they can "move spacecraft without fuel and without violating Newton’s laws of motion", but... it's not at all obvious how those two things can both be true at the same time.

It certainly looks like it would violate conservation of energy, conservation of momentum, and conservation of angular momentum, so I fully expect it to not work. If it does work, it'll increase job security for a lot of physicists, and either overturn all the basic conservation laws, or require explanation of why it's not really violating them after all; maybe space is actually quantized in a way that breaks the assumptions underlying Noether's theorem; maybe it's dumping momentum somewhere else in place of dumping it into local reaction mass; maybe the total energy of the universe is zero, and extra energy produced by the thruster is balanced by negative energy in the gravitational field. [1]

But regardless of how that all shakes out, the claimed effect of an IVO Quantum Thruster is that you put a particular amount of electrical power in, and you get force out, in a fixed ratio, without expelling any reaction mass. And that has implications.

It turns out there is one kind of fuelless thruster that really exists: the photon rocket. Or in other words, the flashlight! Photons have momentum, and apply forces, and you can make them with nothing but electricity--no need to carry fuel. That's why solar sails work. But flashlights produce extremely tiny amounts of thrust per watt--obviously, because you don't have to worry about recoil when turning on a flashlight! In fact, a flashlight (or anything else that produces light pointed mostly in one direction) produces at most about 3.34 micronewtons of thrust per kilowatt--or, in other words, requires at least 299,792,458 watts to produce 1 newton of thrust! If that's a familiar-looking number to you, that's because, in SI units, a watt divided by a newton has the same units as velocity, and the number you get out for the ratio of power to thrust for a photon rocket is, in fact, the speed of light! 299,792,458 W / 1 N = 299,792,458 m/s.
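As a quick numerical check on those figures, here is a minimal Python sketch of the ideal photon-rocket relation F = P / c. It assumes a perfectly collimated, lossless beam (a real flashlight does worse), and the function names are just illustrative choices.

```python
# Ideal photon rocket: emitted light carries momentum away at a rate of P / c.
# Assumes a perfectly collimated, lossless beam; real light sources do worse.

C = 299_792_458.0  # speed of light in m/s (exact by SI definition)


def photon_thrust_newtons(power_watts: float) -> float:
    """Thrust in newtons from emitting `power_watts` of light in one direction."""
    return power_watts / C


def photon_power_watts(thrust_newtons: float) -> float:
    """Light power in watts needed to produce `thrust_newtons` of thrust."""
    return thrust_newtons * C


thrust_per_kw = photon_thrust_newtons(1_000.0)
print(f"{thrust_per_kw * 1e6:.3f} micronewtons per kilowatt")
print(f"{photon_power_watts(1.0):,.0f} watts per newton")
```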

If your fuelless thruster produces that amount of thrust or less, you might as well just use a flashlight. And if you are getting the energy to power it from solar panels, as IVO is, then you might as well use a solar sail, to get double the momentum from the bounce! If you can produce a better power-to-thrust ratio than that, then either you have disproven relativity and established the existence of an absolute reference frame, with absolute motion altering the power requirements of your device... or, if you have a fixed power consumption and a device that respects Galilean relativity, you have a free energy machine. A perpetual motion machine of the first kind. An Over-Unity Device. Maybe it's stealing energy from elsewhere in the universe, maybe it's separating negative energy in the gravitational field, but however you resolve the problems it has with Newtonian mechanics, you've got a device that can output more energy than you put in, without fuel.

Why? Simply because, given constant acceleration under a constant force, the energy consumed by the device grows linearly, but the kinetic energy of the device grows quadratically! Thus, there exists some critical speed at which the curves will cross, and the next incremental increase in speed from activating the thruster will result in the device gaining more energy as kinetic energy than it took to power the thruster to produce that acceleration.

So, why can't you turn a flashlight into an over-unity device? Well, we can calculate the critical speed with some pretty basic Newtonian mechanics. The work done by a force is the force multiplied by the distance over which it acts. If a particular force is applied over a particular distance in a particular period of time, we get power: work done per unit time. So we just need to find the speed--distance over time--such that a force applied at that speed delivers the same amount of power into kinetic energy as it takes to run the device. With a little algebra, we get:

E = F * D

E/t = F * D/t

P = F * V

V = P / F

In other words, the critical velocity is the per-newton power consumption, and as we saw above, for a photon rocket, that is the speed of light! A flashlight can't go faster than the speed of light, because nothing can go faster than the speed of light, so you can never meet the conditions to produce excess energy. But if thrust per watt goes up, then the critical speed goes down, and then you've got an over-unity device. [2]
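To make the crossover concrete, the sketch below compares the rate at which a constant thrust adds kinetic energy (F * V) against a fixed input power at a few speeds. The thruster figures (100 W in for 1 N of thrust) are hypothetical placeholders chosen for round numbers, not values for any real device, and the function names are illustrative.

```python
# Under constant thrust F, kinetic energy is gained at a rate of F * v, so it
# overtakes a fixed input power P once v exceeds P / F (Newtonian mechanics).
# All numbers below are hypothetical placeholders.


def critical_speed(power_watts: float, thrust_newtons: float) -> float:
    """Speed in m/s at which F * v equals the input power."""
    return power_watts / thrust_newtons


def kinetic_power(thrust_newtons: float, speed_m_s: float) -> float:
    """Instantaneous rate of kinetic-energy gain, in watts."""
    return thrust_newtons * speed_m_s


POWER_IN = 100.0  # watts, hypothetical
THRUST = 1.0      # newtons, hypothetical

v_crit = critical_speed(POWER_IN, THRUST)
print(f"critical speed: {v_crit:.1f} m/s")

for speed in (50.0, 100.0, 150.0):
    gained = kinetic_power(THRUST, speed)
    status = "over unity" if gained > POWER_IN else "not over unity"
    print(f"v = {speed:5.1f} m/s: {gained:6.1f} W into kinetic energy ({status})")

# For the ideal photon rocket (299,792,458 W per newton) the same formula
# returns the speed of light, which is why a flashlight never gets there.
photon_critical_speed = critical_speed(299_792_458.0, 1.0)
print(f"photon rocket critical speed: {photon_critical_speed:,.0f} m/s")
```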

So, if the IVO Quantum Thruster, or some other fuelless propulsion device, actually worked as advertised, how could we use it to engineer a practical power plant?

Let's start out by defining some useful variables and formulas:

Pi: the electrical power input to the thruster.

η: the ratio of force to power input, such that Pi*η gives the thrust.

Vo: the velocity of the thruster under power-producing operation.

Pk = Pi*η*Vo: the power delivered as kinetic energy to the thruster (and any supporting structures).

ε: the efficiency coefficient for converting kinetic energy back into electrical energy.

Pe = ε*Pk: the electrical power produced by the device.

Po = Pe - Pi: the usable output power of the device, after some electrical power is used to continue powering the thruster.

G = Po/Pi = ε*η*Vo - 1: the amplification gain factor, or ratio of usable output power to input power.

Vg = (G + 1)/(ε*η): the velocity required to achieve a desired gain factor after accounting for inefficiencies in the equipment. When G = 0, this is the minimum operating velocity.

The IVO thruster is supposed to have a max η of 52 millinewtons / watt. That is a ridiculously huge value. The Rocketdyne F1 engines which powered the first stage of the Saturn V rocket had a thrust-to-power ratio of about 0.77 millinewtons / watt, less than 1/65th as much. That makes the IVO thruster about 15.6 million times more performant than a flashlight, which should be the physical limit, according to currently-known-and-accepted physics. In other words, if these thrusters actually work, they would not just be useful for stationkeeping and orbit boosting of satellites--you could replace a rocket engine with them, cut the fuel load by a factor of 65, run the remaining liquid hydrogen and oxygen through a fuel cell to produce electrical power to run a bank of quantum thrusters, and use that to launch an entire Saturn V into orbit. And if we can build a free-energy device, we don't even need the fuel cells, so continuing with that...

Mechanical-to-electrical conversion efficiencies for alternators can be pretty high--80% to 90%--so let's go ahead and set ε to 0.8. This gives us a minimum operating velocity (with zero gain) of just over 24 m/s. Not all that fast!

So, suppose we set our operating velocity at 30m/s. That gives us a gain factor of just under 25%. If we design for 20 watts input power, producing just over 1 newton of thrust, we will thus generate just under 5 watts of excess power.
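The formulas defined earlier translate directly into a few lines of Python. The sketch below reproduces the break-even speed and the 30 m/s example; the function names are illustrative, and the values of η and ε are simply the ones assumed in the text (the claimed 52 mN/W and an 80% efficient alternator).

```python
ETA = 0.052     # N/W, thrust per watt claimed for the thruster (from the text)
EPSILON = 0.8   # assumed kinetic-to-electrical conversion efficiency


def gain(speed_m_s: float, eta: float = ETA, epsilon: float = EPSILON) -> float:
    """G = epsilon * eta * Vo - 1."""
    return epsilon * eta * speed_m_s - 1.0


def speed_for_gain(target_gain: float, eta: float = ETA, epsilon: float = EPSILON) -> float:
    """Vg = (G + 1) / (epsilon * eta); with G = 0 this is the break-even speed."""
    return (target_gain + 1.0) / (epsilon * eta)


def net_output_watts(input_watts: float, speed_m_s: float) -> float:
    """Po = G * Pi: power left over after feeding the thruster."""
    return gain(speed_m_s) * input_watts


v_min = speed_for_gain(0.0)
gain_at_30 = gain(30.0)
thrust_at_20w = 20.0 * ETA
surplus_at_20w = net_output_watts(20.0, 30.0)

print(f"break-even speed: {v_min:.2f} m/s")
print(f"gain at 30 m/s:   {gain_at_30:.3f}")
print(f"thrust at 20 W:   {thrust_at_20w:.2f} N")
print(f"surplus at 20 W:  {surplus_at_20w:.2f} W")
```

Inverting the gain formula with `speed_for_gain` is handy for working backwards from a desired output to a required rim speed.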

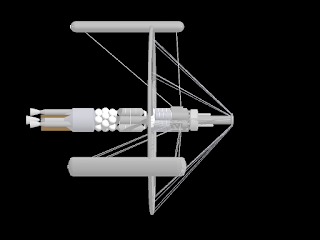

Now, 30m/s isn't ridiculously fast, but it is fast enough that building a linear track to shoot the thruster down would not be particularly convenient. Additionally, using a linear generator to recover excess energy would require pulsed operation, with time to reset the thruster at the beginning of the track after every run, or to slow down and reverse direction. So, let's just bend the thruster path into a circle! If we swing it around an arm with a 0.5 m radius, for a generator 1 meter in diameter, we get a radial acceleration of just under 184g. That might sound like a lot, but tiny benchtop laboratory centrifuges regularly get up to several tens of thousands of gs, and SpinLaunch is trying to build a centrifuge that can hold a small rocket under 10,000g. So, building a centrifuge that can hold an IVO Quantum Thruster under 184g seems very doable, and then it can just spin the shaft of an off-the-shelf alternator to produce power.

5 watts may not seem like very much, but suppose we increase the input wattage with the same gain factor? Stack up thrusters so that they can convert 800 W (about the power consumption of a typical microwave oven), and we'll get 198 W out. Increase Vo to 100 m/s, and the gain jumps to 3.16, corresponding to an output of 2528 W, and a load of only 2041g on the centrifuge. You'd want to armor the casing for this thing, but note that, unlike a flywheel battery, this thing isn't meant to store energy in rotation--so you'd want to make the centrifuge structure as light as possible, and minimize the danger of stored energy.
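The centrifuge loads quoted above follow from the centripetal acceleration a = v²/r. Here is a small sketch that tabulates the load and the net output for the 1-meter design, assuming 1 g = 9.8 m/s² and the same η and ε as in the text; the helper names are illustrative only.

```python
# Centripetal load on a thruster riding the rim of a centrifuge: a = v^2 / r.
G0 = 9.8             # m/s^2 per "g" (assumed rounding)
ETA = 0.052          # N/W, claimed thrust per watt (from the text)
EPSILON = 0.8        # assumed alternator efficiency
RADIUS = 0.5         # m, arm length of the 1-meter-diameter design
INPUT_WATTS = 800.0  # W fed to the stacked thrusters


def rim_load_in_g(speed_m_s: float, radius_m: float) -> float:
    """Radial acceleration at the rim, expressed in multiples of g."""
    return speed_m_s ** 2 / radius_m / G0


def net_output_watts(input_watts: float, speed_m_s: float) -> float:
    """Po = (epsilon * eta * Vo - 1) * Pi."""
    return (EPSILON * ETA * speed_m_s - 1.0) * input_watts


results = {}
for speed in (30.0, 100.0):
    load = rim_load_in_g(speed, RADIUS)
    output = net_output_watts(INPUT_WATTS, speed)
    results[speed] = (load, output)
    print(f"{speed:5.0f} m/s: {load:7.1f} g at the rim, {output:7.1f} W net output")
```

Because the load grows with the square of the speed while the output grows only linearly, structural limits rather than the thrusters themselves set the ceiling for a unit of a given size.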

Bigger devices could operate at higher speeds and higher gain factors, and thus produce even higher wattages, because centripetal acceleration for a given tangential velocity goes down with radius. Suppose we wanted to take this basic design and scale it up by a factor of 100: a 100-meter diameter municipal power station. At 30 m/s, that gives not-quite-2-gs of acceleration, but 100 meters is also the planned size of SpinLaunch's rocket centrifuge, so if we assume we can build for 10,000gs, that gives us an operating speed of just over 2,210 m/s, and a gain factor of 91. With space for a hundred times more thruster units around the rim, Pi goes up to 80kW, giving an output of ~7.28 megawatts--enough to power nearly 6,000 average American homes.
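Turning the centripetal relation around gives the rim speed a structure can tolerate for a given load limit: v = sqrt(a * r). The sketch below applies that to the 100-meter station, again assuming 1 g = 9.8 m/s² and the text's η and ε; `max_rim_speed` is a hypothetical helper name.

```python
import math

G0 = 9.8       # m/s^2 per "g" (assumed rounding)
ETA = 0.052    # N/W, claimed thrust per watt (from the text)
EPSILON = 0.8  # assumed alternator efficiency


def max_rim_speed(radius_m: float, max_load_g: float) -> float:
    """Fastest rim speed (m/s) that keeps v^2 / r within the load limit."""
    return math.sqrt(max_load_g * G0 * radius_m)


radius = 50.0          # m, for a 100-meter-diameter station
load_limit = 10_000.0  # g, the SpinLaunch-style design target from the text
input_watts = 80_000.0

speed = max_rim_speed(radius, load_limit)
station_gain = EPSILON * ETA * speed - 1.0
output_megawatts = station_gain * input_watts / 1e6

print(f"rim speed: {speed:,.1f} m/s")
print(f"gain:      {station_gain:.1f}")
print(f"output:    {output_megawatts:.2f} MW")
```

The output here comes out a hair above the figure in the text only because the text rounds the gain down to 91 before multiplying.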

Now remember, these things wouldn't just violate conservation of energy--they violate conservation of angular momentum too, as a direct consequence of violating conservation of linear momentum. That introduces an entirely new sort of environmental hazard--it would take a long time, but a large number of these sorts of generators, if the fleet isn't properly balanced, would eventually start to have a noticeable impact on the rotation axis of the Earth! On shorter timescales, they would apply inconvenient continuous torques to any spaceships using them for power. Thus, you'd probably want to always build them in pairs, or build units with two counter-rotating coaxial centrifuges, to keep the accumulation of excess angular momentum at zero.

Now, suppose that the IVO thruster does end up failing, but you still want to use this idea for a science fictional device. Maybe you want to go with a less ridiculously aggressive efficiency for your fictional thruster--something comparable to a Rocketdyne F1, for example: η = .77 millinewtons / watt. Keeping our ε value of 0.8, that gives us a minimum operating velocity of 1626 m/s. A one-meter diameter centrifuge won't do in that case! You're looking strictly at larger-scale installations. Even a 100-meter centrifuge would already be operating at well over 5000g with a gain factor of 0! And handling higher gs is actually harder at larger sizes, since the larger centrifuge structure has to support itself under high accelerations as well as the constant-sized functional component--the thruster. If we go up to a 200-meter diameter installation, we could get an effective operating speed of 2220 m/s, for a gain of ~0.37, and an output of 58.6kW for a 160kW power plant, with accelerations just over 5000g--doubling the size of the centrifuge arms, but halving the acceleration they are under.
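For anyone tuning their own fictional thruster, the sketch below works out the break-even speed for a chosen η, the smallest ring radius that keeps that speed under a chosen load limit, and the gain of the 200-meter example. It uses the rounded η = 0.77 mN/W and assumes 1 g = 9.8 m/s², so its results differ slightly in the third significant figure from the numbers quoted above; the function names and the 5000g limit passed to `min_radius` are illustrative.

```python
G0 = 9.8               # m/s^2 per "g" (assumed rounding)
EPSILON = 0.8          # assumed alternator efficiency
ETA_F1_LIKE = 0.77e-3  # N/W, rounded F1-like thrust per watt


def break_even_speed(eta: float, epsilon: float = EPSILON) -> float:
    """Speed (m/s) at which the gain is exactly zero: 1 / (epsilon * eta)."""
    return 1.0 / (epsilon * eta)


def rim_load_in_g(speed_m_s: float, radius_m: float) -> float:
    """Radial acceleration at the rim in multiples of g."""
    return speed_m_s ** 2 / radius_m / G0


def min_radius(speed_m_s: float, max_load_g: float) -> float:
    """Smallest radius (m) that keeps the rim load within max_load_g."""
    return speed_m_s ** 2 / (max_load_g * G0)


v0 = break_even_speed(ETA_F1_LIKE)
load_100m = rim_load_in_g(v0, 50.0)       # 100-meter diameter at zero gain
r_min_5000g = min_radius(v0, 5_000.0)     # radius needed just to break even

gain_200m = EPSILON * ETA_F1_LIKE * 2220.0 - 1.0  # 200-meter example
load_200m = rim_load_in_g(2220.0, 100.0)

print(f"break-even speed:           {v0:,.0f} m/s")
print(f"load at 100 m, zero gain:   {load_100m:,.0f} g")
print(f"break-even radius at 5000g: {r_min_5000g:.1f} m")
print(f"200 m ring at 2220 m/s:     gain {gain_200m:.2f}, {load_200m:,.0f} g")
```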

For more engineering safety margin and higher gain factors, we have to go bigger, and then taking up all of the space inside a gigantic disk for centrifuge arms starts to seem inconvenient--not to mention the mass of the centrifuge structure itself! You don't want to have to lug all that around in the middle of your spaceship! As assumed η values go lower, the minimum viable size of a power plant goes up, and we transition into a regime where fewer and fewer spaceships can make use of them at all, so you've got an excuse to keep spaceships using other power sources while ground-based facilities can use giant free energy power plants. But remember, we don't actually care about storing kinetic energy, so we want to cut down on mass anyway--and all of the over-unity power generation happens in the reactionless thrusters out on the edge. So what if we throw away most of the centrifuge, and just have a ring of thrusters spinning in a circular track? Now, we can build large, spindly ring-shaped power plants with a gigantic hole in the middle that you can fit the rest of a spaceship in! Or giant ground-based rings, several kilometers in diameter, with dirt-and-rock backed tracks holding back rings with thousands of gs, and a city built inside--if anything goes wrong, the ring will explode outward leaving the ship or the city in the middle unharmed. And with all that extra space to stack more thruster units, even a fairly small gain factor could get you gigawatts of power output.

[1] It is generally believed that it should still be impossible to produce energy this way, even though it doesn't technically violate conservation, because if you can separate the vacuum into negative gravitational potential and positive useful energy, that would make the vacuum unstable, and that would be very bad for existence. But if the separation requires a macroscopic machine to achieve, and can't occur as a result of local single-particle interactions, we don't need to be as worried about triggering accidental vacuum decay.

[2] This paper (also linked previously) derives a value for the critical speed that's twice that big; that's because it is calculating a slightly different thing: the speed you have to accelerate to for the instantaneous kinetic energy of the device to exceed the total energy used to accelerate it thus far. But the point at which kinetic energy begins accumulating at a faster rate than energy is put in occurs significantly earlier.
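The factor of two is easy to check numerically. The sketch below assumes a hypothetical 1 kg device starting from rest with a constant 1 N of thrust for 100 W of input (placeholder values, not claims about any real thruster) and compares the speed at which the rate of kinetic-energy gain matches the input power with the speed at which the accumulated kinetic energy matches the accumulated energy spent.

```python
# Newtonian bookkeeping for a device accelerating from rest under constant thrust.
# MASS, POWER_IN and THRUST are hypothetical placeholder values.
MASS = 1.0        # kg
POWER_IN = 100.0  # W
THRUST = 1.0      # N


def speed_at(t: float) -> float:
    """Speed after t seconds: v = F * t / m."""
    return THRUST * t / MASS


def energy_consumed(t: float) -> float:
    """Total electrical energy spent after t seconds: grows linearly."""
    return POWER_IN * t


def kinetic_energy(t: float) -> float:
    """Kinetic energy after t seconds: grows quadratically."""
    return 0.5 * MASS * speed_at(t) ** 2


# Rates match when F * v = P, i.e. v = P / F.
rate_crossover_speed = POWER_IN / THRUST

# Totals match when 0.5 * m * v^2 = P * t with t = m * v / F, i.e. v = 2 * P / F.
total_crossover_speed = 2.0 * POWER_IN / THRUST
t_total = MASS * total_crossover_speed / THRUST

print(f"rates cross at  {rate_crossover_speed:.0f} m/s")
print(f"totals cross at {total_crossover_speed:.0f} m/s (t = {t_total:.0f} s)")
print(f"at that time: {energy_consumed(t_total):.0f} J spent, "
      f"{kinetic_energy(t_total):.0f} J kinetic")
```

Neither crossover speed depends on the mass; a heavier device just takes longer to reach it.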